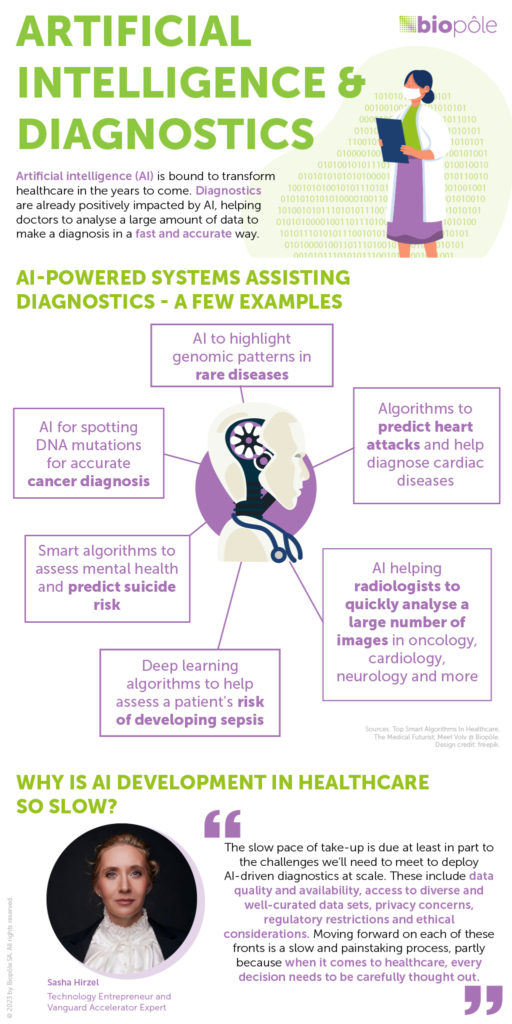

Was the adoption of your AI platform easy or did you come up against resistance?

A few years ago, some radiologists were concerned that our solution might replace them. This concern was widely relayed in the media and contributed to a certain reluctance among radiologists. It was at the heart of a lot of discussions when we started. But once they started using the technology, their fears were assuaged as it became clear that AI was there to assist them rather than take over their jobs. Basically, the application just analyses an image and highlights abnormalities to help radiologists reach diagnoses. For now, it doesn’t consider the patient’s medical history, or even their name or gender. Without the radiologist’s knowledge and expertise, the result could be inappropriate because it lacks this crucial context.

A big challenge to overcome was IT teams’ reluctance to use cloud processing due to concerns around data and access security, mainly in public hospitals. The data processing we use is very demanding in terms of IT resources and can’t really be carried out locally, as this would generate significant additional costs. That’s why we work in close collaboration with the customers’ IT teams that are installing our solutions, to meet all their requirements. These discussions can be quite lengthy, but we always manage to find a way and to demonstrate our command of security, which has been thought through and integrated into our solution right from the design stage. Of course, our secure platform is fully compliant with European regulations and Swiss law.

There is also an economic aspect to take into account. Today, patients can’t be billed for the use of these solutions, so the institutions have to pay for them, and this can sometimes be a limiting factor. For the new technology to be adopted widely, we therefore need to prove the impact that these solutions can have on the organisation, whether by reducing workload, improving workflow in the emergency department or avoiding the need for additional examinations.

How do you make sure your technology is not biased?

First, it is impossible to eliminate bias entirely when training AI models; we can only minimise it. There is a long list of factors to take into account to achieve this, as our application needs to work in every possible context. We need a training base involving tens of thousands of images from multiple and diverse sources. As well as bringing in diversity in terms of ethnicity, gender and age, these images need to include a mix of normal and abnormal results, among other factors. The data must also come from a wide range of equipment, from different brands and generations of technology, from very old to very new, and from the cheapest to the most state-of-the-art machines. And then the annotations must come from lots of different people. In our case, they have to be provided by annotators from different hospitals, with different amounts of experience, different specialties, etc. Before production, we also build strong feedback loops with our first users, all of whom are trained to spot possible bias. Then, at the production stage, we have robust feedback loops with a quick response process, so that any consistently reported failures can be picked up and dealt with.
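As an illustration only, and not a description of the company's actual process, the kind of diversity check described above can be sketched as a simple audit of a training set's metadata. The record fields (`vendor`, `sex`) and the `min_share` threshold are hypothetical names chosen for this example.

```python
from collections import Counter

def underrepresented(records, attribute, min_share=0.05):
    """Return the values of `attribute` whose share of the dataset
    falls below `min_share`, mapped to their observed share.

    `records` is a list of dicts holding per-image metadata.
    The field names and the threshold are assumptions for this sketch.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {
        value: n / total
        for value, n in counts.items()
        if n / total < min_share
    }

# Hypothetical metadata: 19 images from one scanner vendor, 1 from another.
records = [{"vendor": "A", "sex": "F"}] * 19 + [{"vendor": "B", "sex": "M"}]
flagged = underrepresented(records, "vendor", min_share=0.10)
```

A check like this only flags groups that are present but rare. A group that is missing entirely from the data will not appear at all, so the list of expected values still has to come from domain knowledge.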

In all cases, we have a duty to offer high-performing, reliable solutions, so we have to pay close attention to the studies that have been carried out on the use of the applications, or even carry them out ourselves in some cases. Doctors pay a lot of attention to these studies and they challenge us on this. The clinical impact aspect is always the main focus of these discussions.

What do you think about ChatGPT being used as a diagnostic chatbot?

In recent months, we have seen tools being built on ‘large language models’, and they can be quite impressive. However, these generative AI models have no built-in support for the logic and semantics of the medical field. As such, they cannot be used as a diagnostic chatbot, as the facts and reasoning they generate are sometimes wrong. Some uses for these models are emerging in the medical arena, but they more commonly take the form of a bot that handles high volumes of text to reduce paperwork. In other words, in their current form, ChatGPT and similar tools cannot be used for diagnostic purposes, but they can be cleverly engineered for some business purposes in the medical field.

Where does AI have most impact?

Each AI solution has a specific impact: it may be clinical, providing relevant information to support diagnosis, lesion characterisation or quantification, or organisational, offering solutions to automate measurements or longitudinal pathology follow-up. It may even be economic, bringing in new ways to speed up certain procedures.

Take the example of a radiologist analysing the scans of a patient with multiple sclerosis. Currently, they do an MRI of the brain and compare the scan, image by image, to the patient’s previous scan, looking for even the smallest change. It is an incredibly complex and time-consuming ‘spot the difference’ task, as a single pixel that’s different could mean the pathology is evolving. So an application that can do this within a couple of minutes and help provide an answer saves a huge amount of time and also brings more clinical value, for example in the form of quantification, leading to a faster and better diagnosis. This is a level of precision that only a machine can reach, which makes it a very powerful tool for looking at pathological evolution.
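To make the ‘spot the difference’ idea concrete, here is a minimal sketch of voxel-wise comparison between two scans. It is not the product's algorithm: it assumes the two volumes are already aligned (co-registered) and intensity-normalised, which a real pipeline would have to handle first, and the function name, threshold and voxel volume are hypothetical.

```python
import numpy as np

def quantify_change(previous, current, threshold=0.1, voxel_volume_mm3=1.0):
    """Flag voxels whose intensity changed by more than `threshold`
    between two scans and report how much volume they cover.

    Assumes both scans are co-registered and intensity-normalised;
    the default threshold and voxel volume are illustrative only.
    """
    previous = np.asarray(previous, dtype=float)
    current = np.asarray(current, dtype=float)
    if previous.shape != current.shape:
        raise ValueError("scans must have the same shape")
    mask = np.abs(current - previous) > threshold
    n_changed = int(mask.sum())
    return {
        "mask": mask,
        "changed_voxels": n_changed,
        "changed_volume_mm3": n_changed * voxel_volume_mm3,
    }

# Toy example: two 4x4x4 volumes that differ in a single voxel.
previous_scan = np.zeros((4, 4, 4))
current_scan = previous_scan.copy()
current_scan[1, 2, 3] = 1.0
result = quantify_change(previous_scan, current_scan, voxel_volume_mm3=2.0)
```

The returned mask shows where the change is, and the voxel count and volume give the kind of quantification mentioned above. Raw differencing like this is very sensitive to noise and misalignment, which is one reason clinical tools rely on more robust methods.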

What’s it like to work for a start-up in this fast-moving field?

It’s very exciting for me and my team to provide tools and solutions to doctors and to co-create applications with our partners. We’re very much at the heart of this new generation of solutions and can design them in the way we believe will be most useful for the market. It’s fascinating to have conversations with radiologists and figure out how to meet their needs – there’s nothing more satisfying than feeling that we’re helping them do their jobs and therefore impacting patients.